Pandemic year a 'perfect storm' for online abuse, scams: WEF panel

Dealing with harmful information will require partnerships and proactive measures, the panellists said.

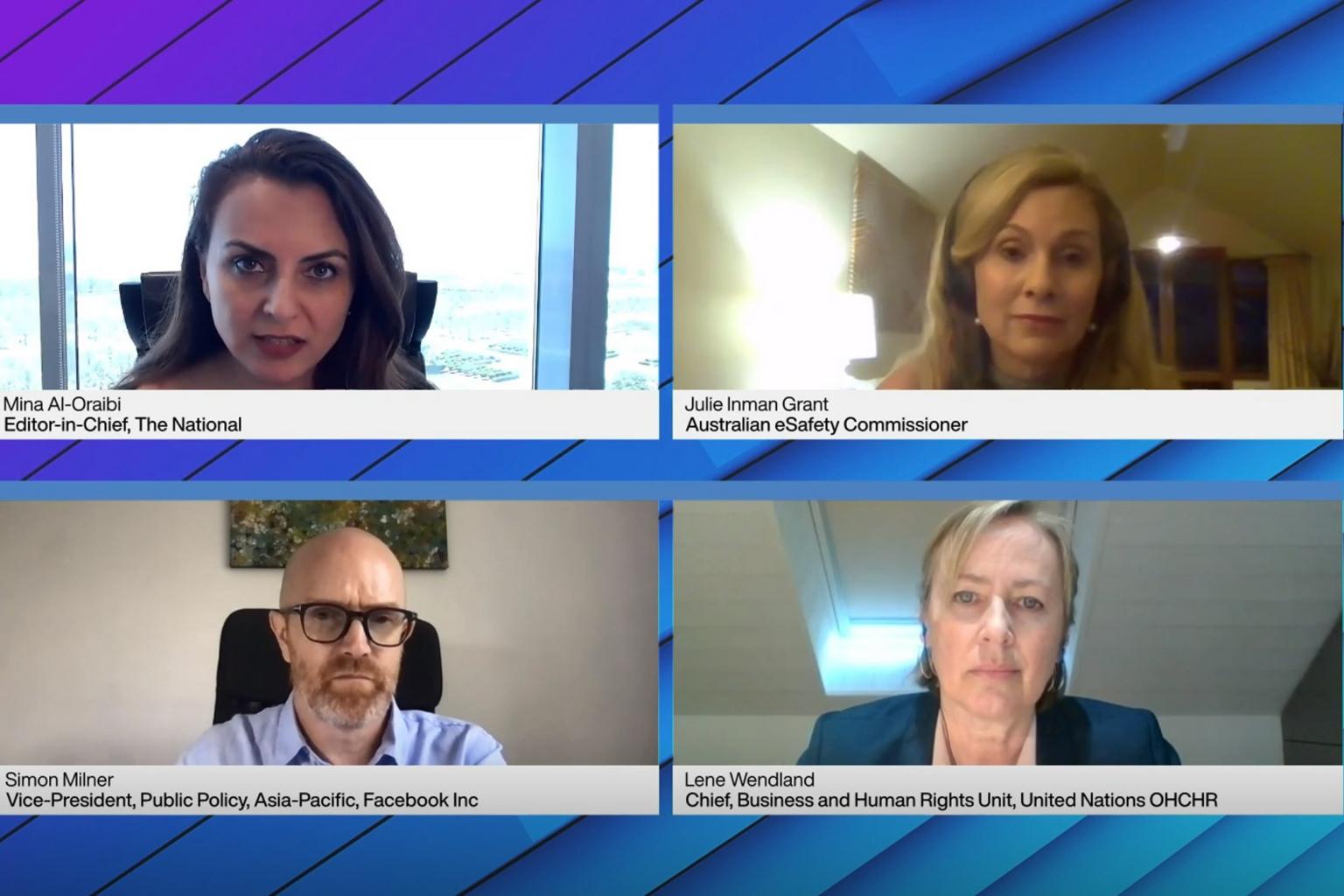

PHOTO: WORLD ECONOMIC FORUM

SINGAPORE - The year 2020 was a "perfect storm" as people took to the Internet in droves to work and socialise, and with that came a sharp jump in cases of abuse and scamming, highlighting the promise and perils of the proliferation of technology.

There is still a lack of clarity on what harmful content really means, technology often outpaces policy, and regulation is not always the answer, panellists said during a session on Countering Harmful Information on Wednesday (April 7) at the World Economic Forum's virtual Global Technology Governance Summit.

Their remarks reflected the conundrum of dealing with the growing use of technology, which is transforming lives at an unprecedented pace but is also giving rise to uncivil behaviour, criminal activity and malpractices that cause harm, intentionally or otherwise, and widen schisms in society.

Australia's eSafety commissioner Julie Inman Grant noted that all forms of abuse were supercharged during the pandemic year. There was a rise in child sexual abuse material on the Web, as well as in cyberbullying among young people and in scams.

"The Internet became an essential utility, to which people gravitated to work and socialise. You overlay that with all forms of humanity and you have a perfect storm," she said.

Australia's eSafety Commissioner promotes online safety education for young people, educators and parents, and allows those who come across harmful or offensive comments to report them.

Pointing out that the office did not itself police harmful material and instead relied on citizens to report content, Ms Inman Grant said that while this provided a safety net for people, "digital platforms" also needed "to erect digital guard rails".

Facebook's vice-president of public policy for the Asia-Pacific region, Simon Milner, said his company had been advocating regulation on a number of issues, but that the platform was not waiting for it to happen.

Facebook was designing technologies to find harmful content before others could see it, he said. Citing the example of using technology to detect hate speech, Mr Milner said that three years ago only a quarter of such content could be found this way. Now, the figure is 97 per cent.

Pointing out that addressing these issues required an investment of time and money, he cautioned against a "one size fits all" policy. Larger platforms could be held to account, but smaller firms should employ safety by design, he said.

"Don't regulate on the basis of technology, you will get it wrong, and don't try with a one-size-fits-all approach. It doesn't take into account the different nature of products or the fact that young people use services for different things," Mr Milner said.

"There is no perfect law out there. Countries are trying to figure it out, and hopefully soon we will get to a point where there will be something we can all get behind."

Ms Lene Wendland, chief of the business and human rights unit at the Office of the United Nations High Commissioner for Human Rights, said the speed of technology posed a challenge but the underlying principles to navigate the space were there.

A focus on prevention, protection and proactive change was key to addressing inherent issues, Ms Inman Grant said.

Comparing the effort to tackle harmful content online to an arms race, Ms Inman Grant said tectonic shifts in technology required that the world stay a step ahead of the criminals weaponising technological platforms.

Raising the issue of end-to-end encryption, she remarked: "How do you take down content when there's no owner? We need to think more actively about what are the unintended consequences."