Amazon unveils new AI chip in battle against Nvidia

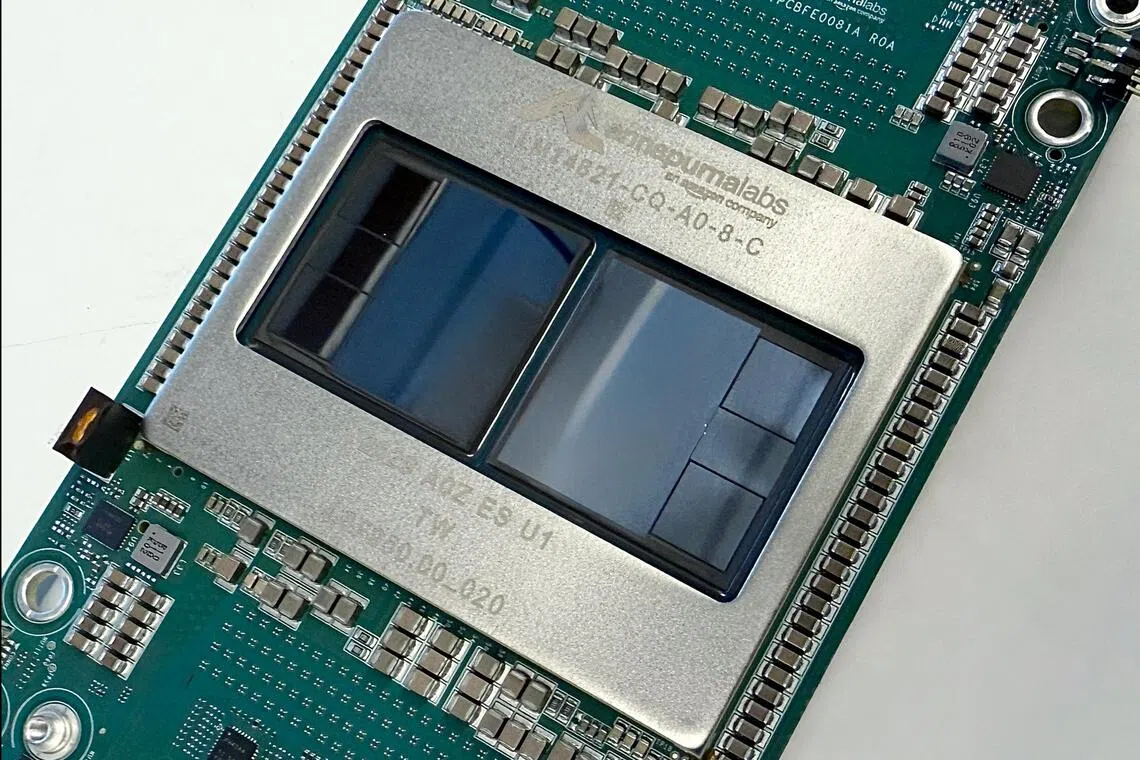

Amazon Web Services' Trainium3 AI chip is said to deliver over four times the computing performance of its predecessor while using 40 per cent less energy.

PHOTO: REUTERS

- AWS launched its Trainium3 AI chip, competing with Nvidia by offering lower costs and more than four times the performance of its predecessor.

- AWS claims Trainium3 cuts AI training and operating costs by up to 50 per cent, democratising access, and says the chip is already used by companies such as Anthropic.

- AWS is developing Trainium4, which will support Nvidia's technology, while Nvidia stated it "is a generation ahead of the industry."

AI generated

SAN FRANCISCO - Amazon Web Services (AWS) launched its in-house-built Trainium3 AI chip on Dec 2, marking a significant push to compete with Nvidia in the lucrative market for artificial intelligence (AI) computing power.

The move intensifies competition in the AI chip market, where Nvidia currently dominates with an estimated 80 per cent to 90 per cent market share for products used in training large language models that power the likes of ChatGPT.

Google last week caused tremors in the industry

This followed the release of Google’s latest AI model in November that was trained using the company’s own in-house chips, not Nvidia’s.

AWS, which will make the technology available to its cloud computing clients, said its new chip is lower-cost than rivals and delivers more than four times the computing performance of its predecessor while using 40 per cent less energy.

“Trainium3 offers the industry’s best price performance for large-scale AI training and inference,” AWS chief executive Matt Garman said at a launch event in Las Vegas, Nevada.

Inference is the execution phase of AI, in which a model that has finished training on data starts performing tasks in real-world scenarios.

Energy consumption is one of the major concerns about the AI revolution, with major tech companies having to scale back or pause their net-zero emissions commitments as they race to keep up with the technology.

AWS said its chip can reduce the cost of training and operating AI models by up to 50 per cent compared with systems that use equivalent graphics processing units, or GPUs, mainly from Nvidia.

“Training cutting-edge models now requires infrastructure investments that only a handful of organisations can afford,” AWS said, positioning Trainium3 as a way to democratise access to high-powered AI computing.

AWS said several companies are already using the technology, including Anthropic, maker of the Claude AI assistant and a competitor to ChatGPT-maker OpenAI.

AWS also announced it is already developing Trainium4, expected to deliver at least three times the performance of Trainium3 for standard AI workloads.

The next-generation chip will support Nvidia’s technology, allowing it to work alongside that company’s servers and hardware.

Amazon’s in-house chip development reflects a broader trend among cloud providers seeking to reduce dependence on external suppliers while offering customers more cost-effective alternatives for AI workloads.

Nvidia puzzled industry observers last week when it responded to Google’s successes in an unusual post on X, saying the company was “delighted” by the competition, before adding that Nvidia “is a generation ahead of the industry”. AFP