Grab calls for voice samples from users to fine-tune app feature for the visually impaired

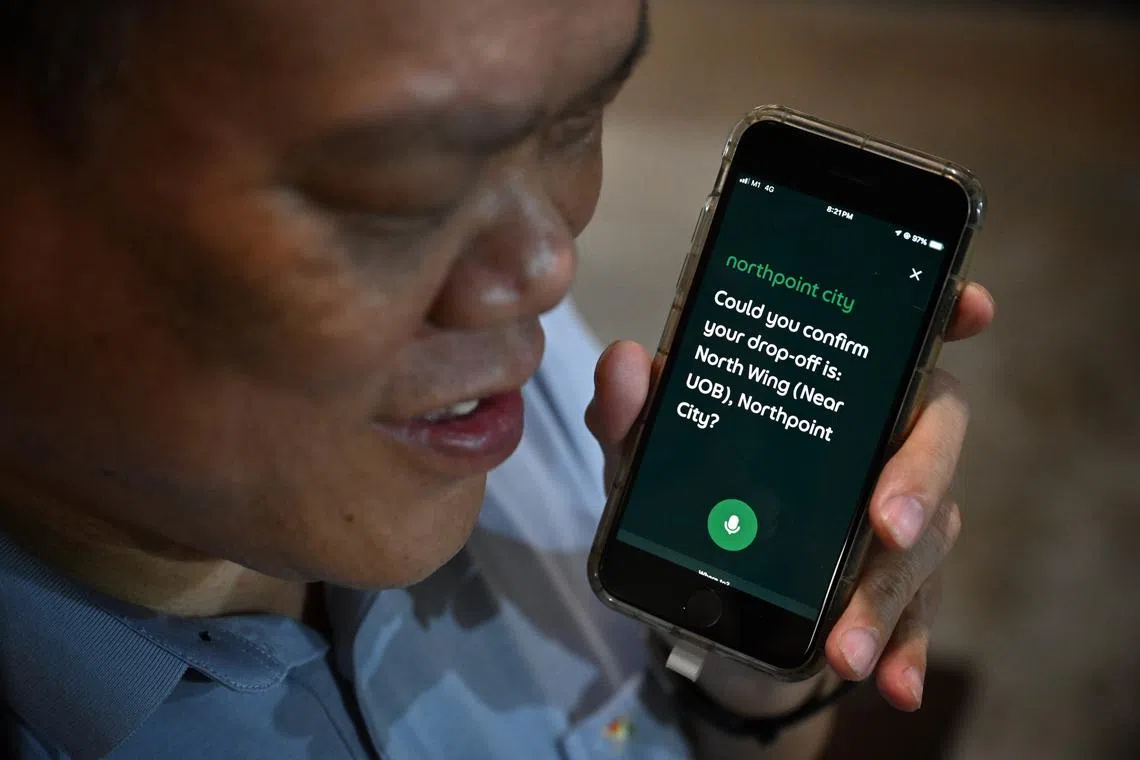

Mr Peter Lim, who is visually impaired, using Grab's voice assistant powered by AI to book a ride from his home to a nearby mall.

ST PHOTO: CHONG JUN LIANG

SINGAPORE – Getting a ride to his destination became easier for Mr Peter Lim in June, after Grab rolled out a voice assistant powered by artificial intelligence (AI) on its app.

Mr Lim, who is visually impaired, welcomed this development, since it meant he could book a ride simply by using his voice.

However, the 59-year-old call centre operator said that booking a ride to some places, such as Kalidasa Avenue and Lim Ah Pin Road, still poses a challenge.

The voice assistant has trouble at times understanding Singaporean speech patterns, so Mr Lim sometimes gives just the postal code of his destination.

Recognising this, Grab launched an open call in late June for voice samples from its users to fine-tune its AI model.

The model is built on OpenAI’s GPT-4.1 large language model and was fine-tuned with 80,000 voice samples provided by Grab employees. Its accuracy in understanding Singaporean accents and names of places is already 89 per cent.

But further work needs to be done to account for the numerous ways in which locations across Singapore can be pronounced.

For instance, Hougang can be articulated with or without the letter “h”, while Clementi can be pronounced as “Kle-man-tee” or “Klair-men-tee”.

While there is no target set on how many voice samples Grab wants to collect by Dec 31, it aims to bring the accuracy up to 95 per cent, a Grab spokesperson told The Sunday Times.

“This involves having a greater variety and volume of voice samples that vocalise places of interest in different pitches, tones, accents and styles,” said the spokesperson.

The voice samples invited from users cover the top 85 per cent of the most commonly selected locations in Singapore.

“Participants will be shown a randomised selection of places of interest from this list for them to vocalise the names accordingly,” said the spokesperson.

The company has already received nearly 10,000 voice recordings from users.

Wallich Manor, 80 Dunbar Walk, and 31 Jalan Mutiara Latitude were among locations that this ST reporter was asked to record herself saying to improve speech pattern recognition on the app.

The voice assistant has been helpful, especially when combined with the app’s ability to suggest possible locations that the user might want to go to at different times of the day, said Mr Lim.

“It will ask me if I want to go home, or if I want to go to church on Sunday mornings. If I say no, I can tell it where I want to go instead,” he added.

“It is around 90 per cent accurate in understanding me so far.”

The AI voice assistant is available to users with the talkback feature enabled on their phones.

Members of the Singapore Association of the Visually Handicapped (SAVH) participated in focus group discussions and were involved in testing the feature before it was rolled out.

Ms Lyn Loh, who heads SAVH’s accessibility services department, said the voice assistant was initially not very responsive when given voice commands during the testing phase. It took a few tries for it to understand what she was saying.

“But now, it is much better,” said Ms Loh.

The feature will be very useful if it can be used to order food delivery via the app as well, said Ms Loh, who added that she has given feedback to Grab about this.

During the Covid-19 pandemic, she had to learn how to order food delivery, as she could not see the markings pasted on floors and seats at food establishments that dictated where people could queue and sit. “We never know when the next pandemic will happen,” said Ms Loh.

Grab also tested the feature’s performance during the developmental phase by benchmarking it against Meralion, an AI model that can understand at least eight regional languages.

“While both models are designed for Singlish-speaking users, Meralion focuses on natural language understanding, while Grab’s voice assistant is tailored specifically to help users identify locations and points of interest in Singapore,” said Grab’s spokesperson.

Recordings collected are encrypted and stored for a year in a secure server, and are not linked to any personal identifiers such as the user’s name or mobile number, said the spokesperson.

Mr Lim and Ms Loh expressed hope that this voice assistant will help not only the visually impaired, but also the elderly and people with physical disabilities.

“If it is improved, it can help many other people that just want to talk and not type,” said Mr Lim.