SINGAPORE - Local start-up BasisAI is preparing to go regional with "responsible" artificial intelligence, on the back of a collaboration with Facebook and the Infocomm Media Development Authority (IMDA).

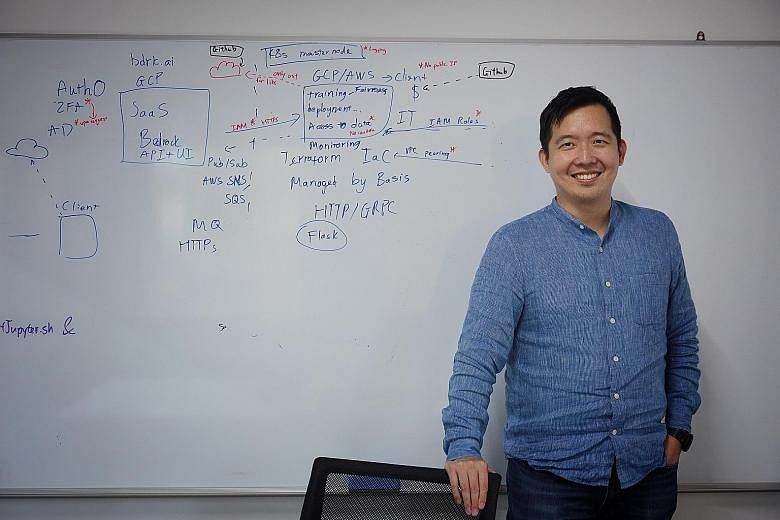

BasisAI's machine-learning Bedrock solution serves as a cyber auditor of AI algorithms by making their decision-making processes more transparent, and helping data scientists identify when an algorithm might be unfairly biased.

The company recently partnered with Facebook and IMDA to mentor 12 other start-ups in the Asia-Pacific region as they prototyped ethical and efficient AI algorithms in a six-month programme that ended last month.

This programme was part of Facebook's Open Loop initiative, which aims to work with policymakers and tech companies around the world to develop regulatory frameworks for emerging technologies like AI.

BasisAI has inked a partnership with one of the 12 start-ups - Indonesian computer vision start-up Nodeflux - to further develop the explainability of the latter's facial recognition and object detection algorithm.

"We really want to partner with ambitious companies in financial services, healthcare and transportation across the region," said BasisAI chief executive Liu Fengyuan.

"We're seeing greater social awareness about responsible AI, and I think more companies are concerned now about the reputation risk from adopting AI that is a black box."

Last August, for example, the United Kingdom's Home Office abandoned a controversial AI algorithm that was used to process immigration visa applications, after campaigners decried the algorithm as "racist" for allegedly favouring certain nationalities over others.

The Home Office offered no explanation of how its AI tool worked at the time, and the algorithm was scrapped a week before a court's judicial review of its decision-making.

Responsible AI has also been at the centre of an ongoing spat between Google and its employees, which has led to the departure of two prominent AI researchers.

"A very simple example of responsible AI is Netflix's 'Because You Watched' (tab) that tells you, because you watched Wonder Woman, that's why we're recommending you these other shows," said Mr Liu.

"It takes away a bit of the creep factor when you are told (your viewing history) is one of the key contributors why these recommendations are shown to you, and not because something else is being tracked."

Bedrock was launched last June, less than two years after Mr Liu founded BasisAI with twin brothers Linus and Silvanus Lee in 2018.

Mr Liu was previously the director of the data science and AI division at the Government Technology Agency, while his two US-based co-founders have worked in data science roles at Twitter, Uber and Dropbox.

The company is currently working with DBS Bank, Accenture and PwC, among other partners.

Mr Liu challenged the perception that developing responsible AI is an extra step that involves additional effort.

"If you don't do anything, then when an audit does come, you're left having to dig up e-mails and all that stuff," he said.

"But if you're checking the code into (Bedrock) as you work, then it's doing the logging and auditing for you.

"It's about starting with the right foundation, where governance isn't an afterthought."

More transparency about AI 'black box'

Artificial intelligence (AI) algorithms are designed to do their work unnoticed in the background, accomplishing laborious tasks and making simple decisions on behalf of their human designers.

But as the adoption of AI has accelerated and evolved, so too has scrutiny of the opaque decision-making processes of such algorithms, especially when the decisions are perceived as prejudiced or unfair.

"A lot of times (companies) don't pursue AI initiatives because of concerns over transparency and explainability," BasisAI chief executive Liu Fengyuan said. "They say, 'Hey, you're giving me a black box that I don't understand, and I'm not going to use it'."

That is where BasisAI's Bedrock solution comes in, to turn AI "black boxes" into glass ones, where the inner workings of the algorithms can be clearly seen and explained.

A data scientist runs an algorithm through the Bedrock software while coding it.

Bedrock then flags all the metrics that the AI algorithm uses to make its decisions, which could range from age and gender to financial status and allergies, and shows how much weight the algorithm gives each metric.

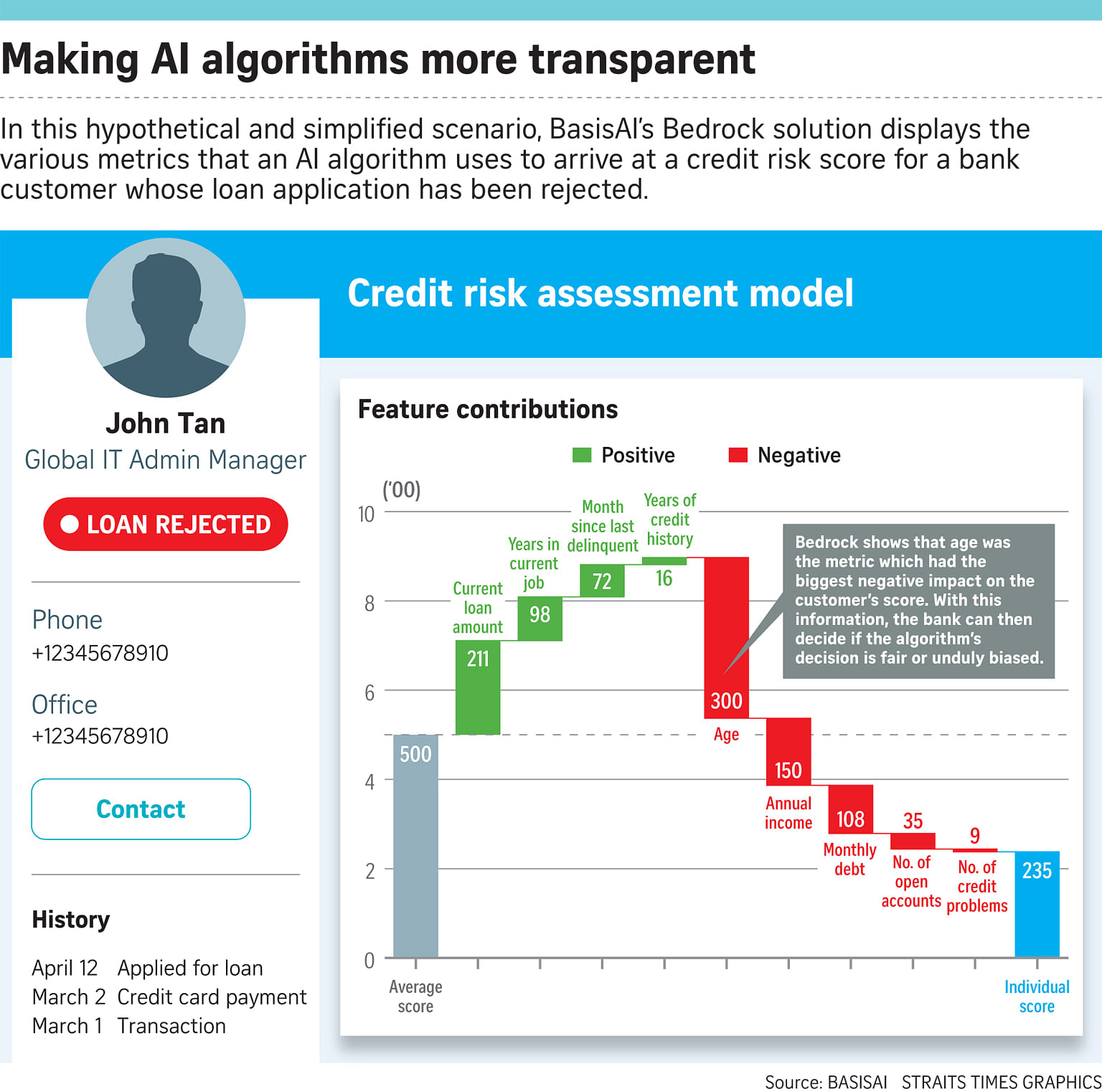

For instance, a bank's AI algorithm could have given a certain risk score to a customer applying for a loan.

Using Bedrock, the bank's technical team would be able to see the metrics that determined the score.

The customer's age might have boosted the score, while the number of previous credit problems might have lowered it. The bank's team can then identify and correct any unwanted biases in the algorithm.
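To make the loan example concrete, here is a minimal sketch in Python of how a score can be broken down into per-metric contributions. It is not Bedrock's code or interface, which the article does not describe. It assumes the simplest possible case, a linear model in which each metric's contribution is its weight multiplied by the applicant's value. The metric names, weights and baseline are all invented for illustration, with signs chosen to match the example above.

```python
# Hypothetical weights for an illustrative linear scoring model.
# All names and numbers are invented for this sketch; none come from
# a real bank or from Bedrock.
WEIGHTS = {
    "age": 0.5,                        # boosts the score
    "previous_credit_problems": -10.0,  # lowers the score
    "annual_income_thousands": 0.25,
}
BASELINE = 20.0  # score assigned before any metric is considered


def explain_score(applicant: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the score and each metric's contribution to it.

    For a linear model, a metric's contribution is weight * value,
    and the contributions plus the baseline add up to the score.
    """
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BASELINE + sum(contributions.values())
    return score, contributions


applicant = {
    "age": 40,
    "previous_credit_problems": 2,
    "annual_income_thousands": 40,
}
score, contributions = explain_score(applicant)

# List the metrics from most score-boosting to most score-lowering.
for name, value in sorted(contributions.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>26}: {value:+.1f}")
print(f"{'score':>26}: {score:.1f}")
```

Real-world models are rarely this simple, and attributing a score to individual metrics in a non-linear model requires more involved techniques. The principle is the same, though: once each metric's contribution is visible, a team can spot one that carries more weight than it should.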

Mr Liu said Bedrock's insights are, for now, targeted at decision-makers with technical expertise, such as a firm's chief data scientist or chief technology officer.

"But over time, we want to make these reports a lot more accessible to the lay person because decisions aren't just made by chief data scientists, but also by the people at risk," he added.