Would you trust a robot to operate on your eyeball?

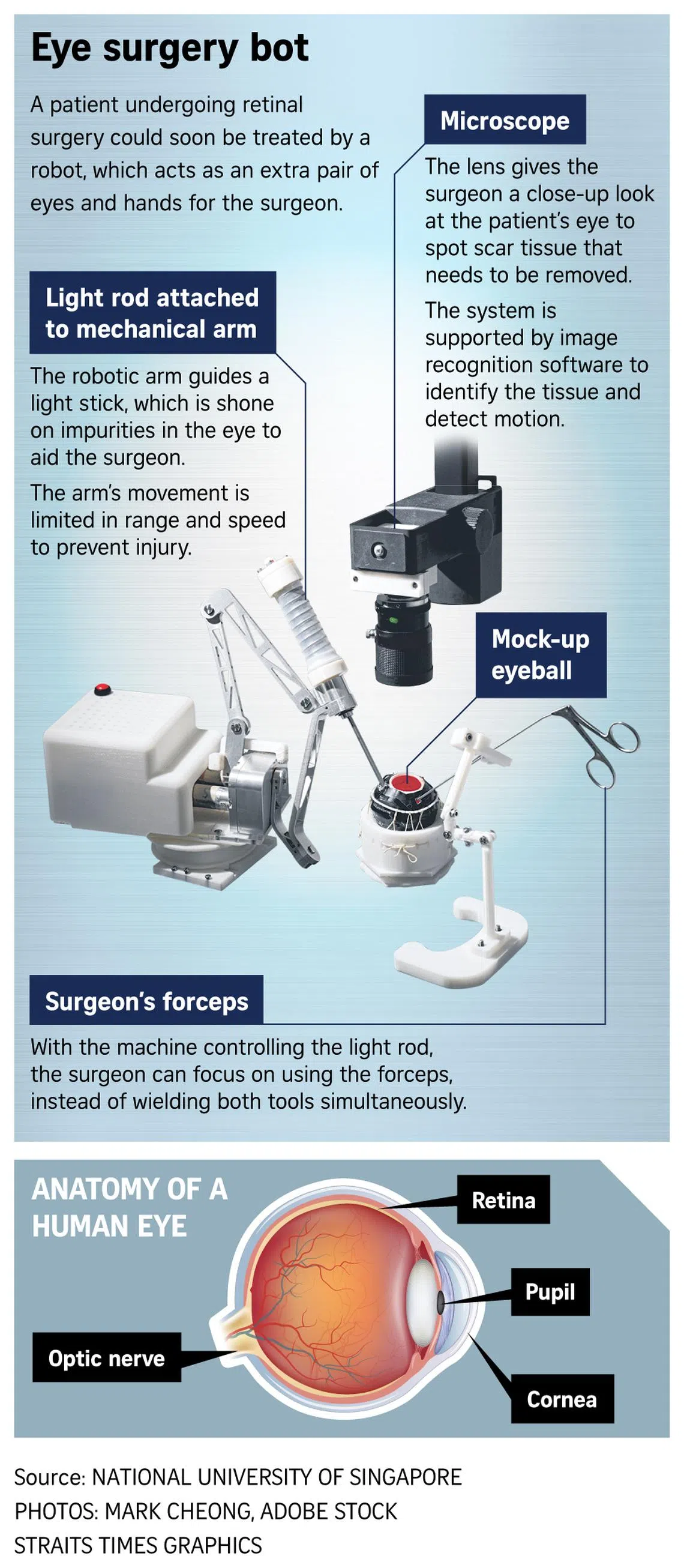

The robot-assisted microsurgical system uses artificial intelligence to locate anomalies in the eye and has precise tool-handling.

ST PHOTO: MARK CHEONG

SINGAPORE - Popular horror, as depicted in the likes of video game Dead Space and blockbuster movie Final Destination 5, reflects a fear of machines operating on humans.

An infamous surgical sequence in Dead Space 2, for instance, vividly shows what can go wrong: if the player misaligns a surgical needle during the procedure, the result is gruesome.

Researchers from the National University of Singapore (NUS) hope to overcome such fears with a robot assistant for eye surgeons, as robots can be of great use in high-precision microsurgery, where there is little to no room for error.

The device, called the Beam-a-bot, is slated for tests on animal subjects within the next three years, before it is put through clinical trials at the National University Hospital (NUH), with the aim of eventually assisting surgeons in operations.

The eye surgery bot was among 30 robotic projects showcased by the College of Design and Engineering on March 8 during NUS’ annual open house.

The school’s largest robotics display features projects that began as research initiatives, student creations and start-ups affiliated with NUS. The college, which opened in 2022, will also introduce a new four-year Robotics and Machine Intelligence degree in August to meet the rising demand for more specialised engineering programmes, said college dean Teo Kie Leong.

The school aims to provide projects like the eye surgery bot with a pathway to commercialisation and deployment outside of the lab, Professor Teo told The Straits Times during a preview on March 4.

Associate Professor Chui Chee Kong, who leads the team of researchers developing the eye surgery device, said it is intended to act as an extra pair of eyes and hands for surgeons, who have the tricky task of operating a light stick and forceps simultaneously to remove tissue within an eyeball.

Surgeons have to work with extreme care as any slip-up can result in injury, said Prof Chui.

“In fact, some surgeons practise using chopsticks with both hands, so that they are able to use the operating tools confidently.”

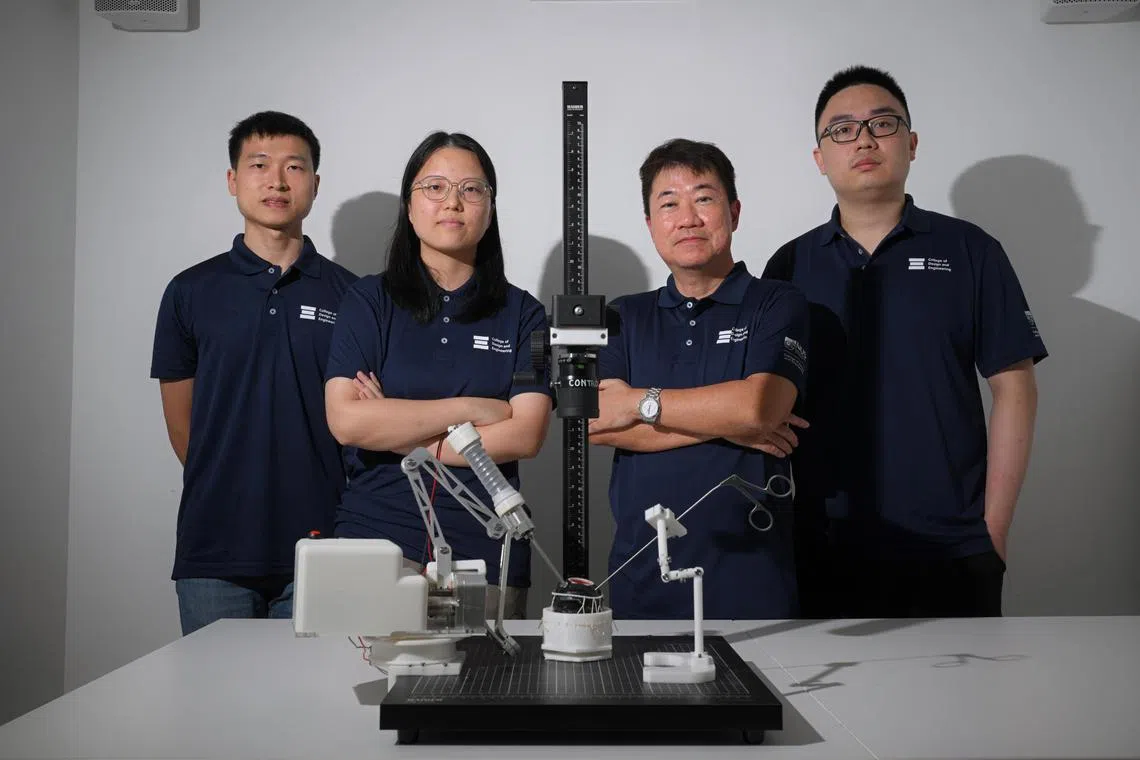

(From left) The NUS team behind the AI-powered robot-assisted microsurgical system includes mechanical engineering PhD student Zhang Ziwei, 33, research engineer Lin Wenjun, 26, mechanical engineering associate professor Chui Chee Kong, 57, and mechanical engineering PhD student Zhang Wending, 25.

ST PHOTO: MARK CHEONG

The set-up includes a robotic arm that moves a light rod inserted into the eyeball, while a digital microscope relays footage to a computer as the surgeon makes delicate movements to snip out damaged tissue or impurities.

The system’s software, developed by the NUS team, uses image recognition technology that was more than 85 per cent accurate in the team’s early tests.

At NUH, the device is intended to be used first in laparoscopic operations on the abdomen, which allow a larger margin for error than eye surgery, said Prof Chui.

Robotic tools are already used in other types of eye operations, such as laser vision correction and cataract surgery, but remain in development for operations inside the eyeball or on the retina at the back of the eye, which demand a high level of precision. Researchers at Oxford University performed the first in-eye operation using a robot in 2016, removing a wrinkled membrane from a patient’s eye.

Prof Chui hopes the NUS project can pave the way for robotic assistants in other forms of microsurgery – a general term for surgery requiring an operating microscope – including operations on blood vessels and nerves.

The project joins other health-related prototypes on display at NUS, such as a high-tech glove lined with sensors that automatically record a patient’s performance during rehabilitation, reducing assessment time. Another team developed a robotic arm that can be fitted onto wheelchairs, allowing users with muscle degeneration to perform basic tasks such as tapping a transport card at an MRT station gantry.

Outside of healthcare, a graduate research project showcased a range of robots in the form of a dog, a spider and a rover on wheels, which can be deployed across various kinds of terrain for inspection.

A graduate research project showcased a range of robots in the form of a dog, a spider and a rover on wheels.

ST PHOTO: MARK CHEONG

They are powered by digital mapping technology, developed by the team, that can render a construction site or facility in 3D almost instantly, said senior lecturer Justin Yeoh. The technology has been used in trials such as inspecting the condition of staircases in flats. The project is on track to be spun off into a start-up in the coming months.

Students also showcased BoxBunny, a 2m-tall robot with foam arms that throws surprise jabs and hooks, allowing boxers to practise striking its head and torso while working on their reaction times.

Mr Mageshkumar Kirubasankar, 26, who was in charge of the mechanical design of the project, demonstrates the BoxBunny, a boxing training robot.

ST PHOTO: MARK CHEONG

The inspiration came from teammate Muhammad Zakir Haziq, who attends boxing classes, after he and his friends were unable to train in person during the Covid-19 pandemic.