74% of internet users encounter harmful content like cyber bullying: MDDI survey

There was also a notable increase in encounters with content inciting racial or religious tension, and violent content.

PHOTO: ST FILE

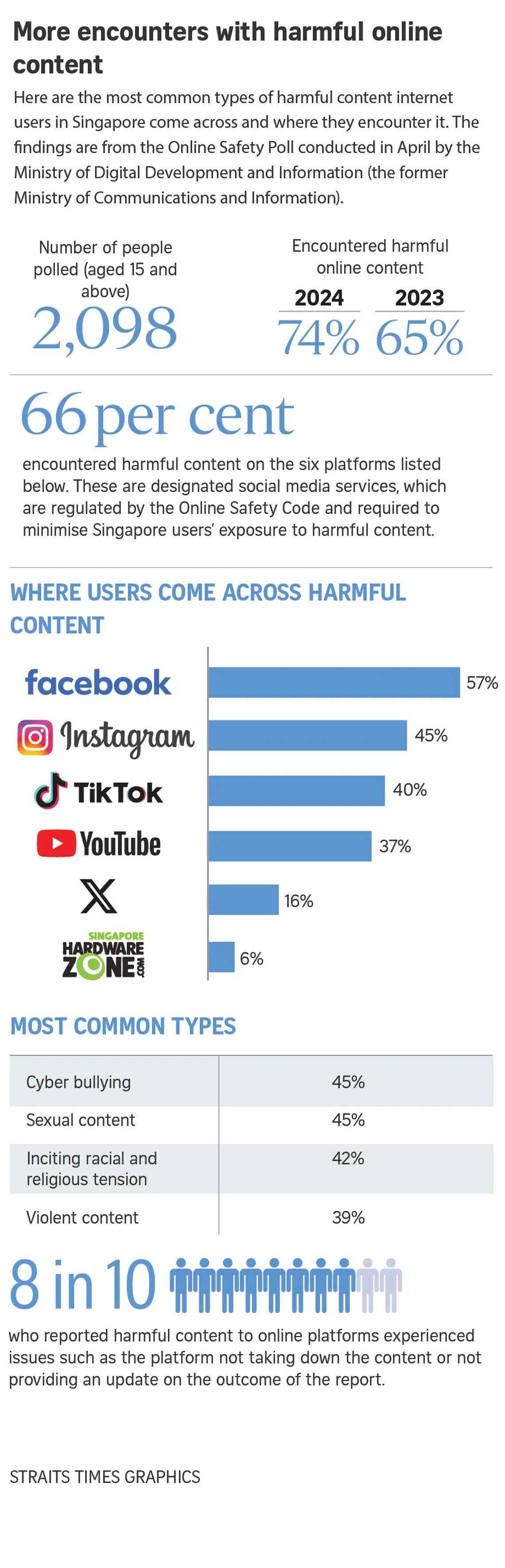

SINGAPORE - More internet users here are encountering harmful content such as cyber bullying or sexual content online, with the number rising to 74 per cent of those polled in an April survey, up from 65 per cent in 2023.

But only about a quarter of them report it.

The 2024 survey was conducted by the Ministry of Digital Development and Information (MDDI).

Among the types of harmful content encountered on social media, there was also a notable increase in content inciting racial or religious tension and in violent content. These rose by 13 percentage points and 19 percentage points, respectively, compared with 2023.

The survey of 2,098 people in Singapore aged 15 and above found that 66 per cent of respondents had come across harmful content on designated social media services, which include platforms such as Facebook and HardwareZone.

This is up from 57 per cent in 2023, when the first such survey was conducted by the then Ministry of Communications and Information.

The increase took place despite the introduction of measures aimed at reducing users’ exposure to harmful content online.

The designated social media services are six platforms – Instagram, TikTok, X, YouTube, Facebook and HardwareZone – with significant reach or impact that are required to comply with the Infocomm Media Development Authority’s Code of Practice for Online Safety, which came into effect in July 2023.

The code requires that these services minimise Singapore users’ exposure to harmful content, with additional protection for children, among other measures.

The Online Safety Poll aims to understand the experiences of internet users here who have encountered harmful online content, as well as the actions they took to address such content.

Apart from social media, the survey found that 28 per cent of respondents had encountered harmful content on other platforms, such as messaging apps, news websites and gaming platforms.

Only about 27 per cent of respondents reported harmful content to the platforms, while 61 per cent ignored it and 35 per cent blocked the offending account or user.

Among those who made reports, about 80 per cent experienced problems with the reporting process: the platform did not take down the content or disable the account responsible, did not provide an update on the outcome of the report, or allowed the removed content to be reposted.

Those who did not report harmful content cited reasons such as seeing no need to take action or believing that making a report would not make a difference.

Facebook and Instagram, both owned by tech giant Meta, were the social media platforms where the most survey respondents came across harmful content – 57 per cent and 45 per cent, respectively.

“While the prevalence of harmful content on these platforms may be partially explained by their bigger user base compared with other platforms, it also serves as a reminder of the bigger responsibility these platforms bear,” MDDI said in a media statement.

Facebook and Instagram were followed by TikTok (40 per cent), YouTube (37 per cent), X (16 per cent) and HardwareZone (6 per cent).

The most common types of harmful content encountered on designated services were cyber bullying and sexual content, each experienced by 45 per cent of respondents.

The ministry pointed out a “notable increase” in encounters on such services with content that incited racial or religious tension – which stood at 42 per cent – as well as violent content, experienced by 39 per cent of respondents.

Dr Carol Soon, principal research fellow at the Institute of Policy Studies, said the increase in encounters with harmful content online is a worldwide phenomenon.

“As people spend more time online, their chances of being exposed to content that is harmful, inappropriate or offensive inevitably increase,” she said, adding that there is now greater access to photo and video editing tools to create content such as deepfake pornography.

Dr Soon, who is also vice-chair of the Media Literacy Council, said online platforms need to up their game by shortening their response time to reports of harmful content, as well as better leveraging technology such as artificial intelligence to detect such content.

“The designated social media services are due to submit their annual online safety reports soon – that would help identify what more needs to be done,” she added.

MDDI said that addressing the “complex, dynamic and multifaceted nature” of online harms requires the Government, industry and people to work together.

It pointed to measures taken to protect people in Singapore from online harms, such as the announcement earlier in July that a Code of Practice for App Distribution Services will be introduced, requiring designated app stores to implement age assurance measures.

“Beyond the Government’s legislative moves, the survey findings showed that there is room for all stakeholders, especially designated social media services, to do more to reduce harmful online content and to make the reporting process easier and more effective,” the ministry said.