Fakes are good business, don’t expect AI to stop generating them

Spotting misinformation will only become harder, but consumers can’t afford to get complacent.

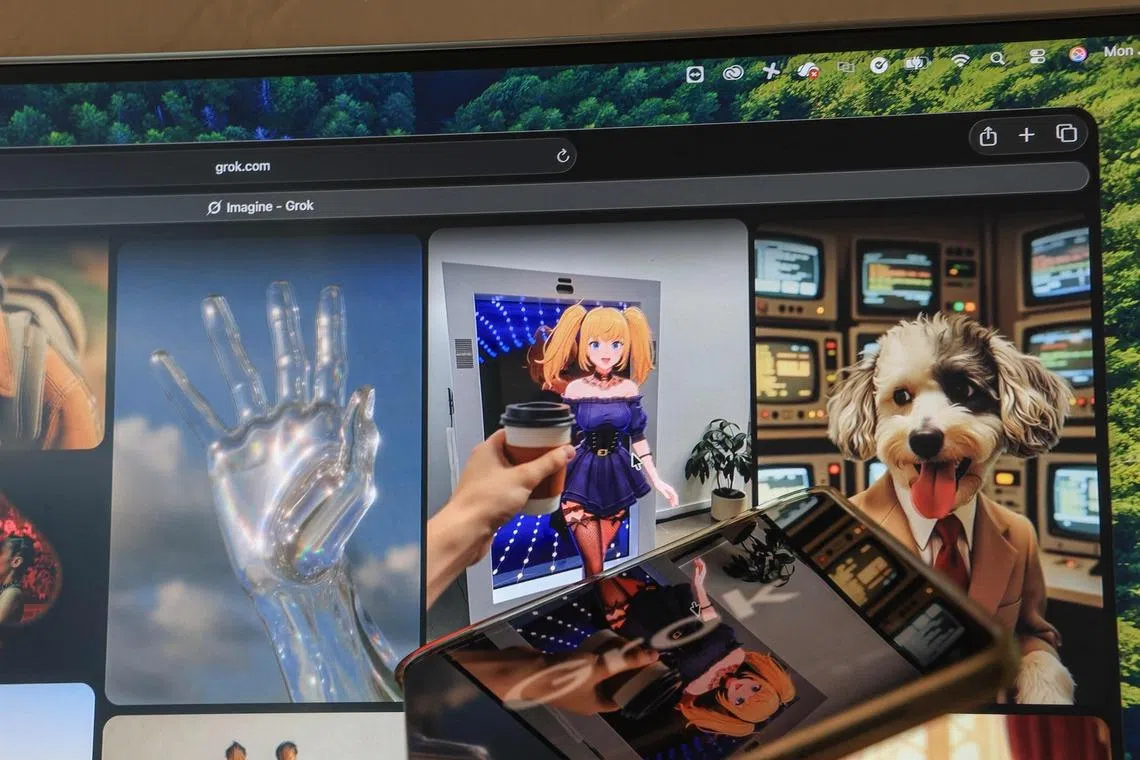

The recent uproar over X’s Grok image-generation tool offers a stark example of how commercial incentives can undermine content policing, says the writer.

PHOTO: AFP

Hands up if you fell for the AI-generated video of the “emotional support kangaroo” a woman was trying to bring on board her flight, or if an image shared in your WhatsApp chat triggered heated disputes over whether it was AI slop. And hands up if you now find yourself routinely verifying stories that sound so outrageous that an AI tool could have hallucinated them.

Such is our lived reality in a digitally connected world where the capabilities of generative AI have been unleashed without due safeguards. Again and again, we consumers must personally undertake due diligence on content we see or receive, even as we grapple with information overload. But is placing this burden on consumers fair?