Japan aims to develop AI translation system that reads facial expressions by 2020


Japan's Internal Affairs and Communications Ministry expects the system to be used for teleconferencing between Japan and other countries and for translating online videos.

PHOTO: THE YOMIURI SHIMBUN/ASIA NEWS NETWORK


TOKYO (THE YOMIURI SHIMBUN/ASIA NEWS NETWORK) - Japan's Internal Affairs and Communications Ministry plans to develop, by around 2020, a more precise automatic translation system that uses artificial intelligence (AI) to read facial expressions, The Yomiuri Shimbun has learned.

The system will analyse a speaker's facial expressions and scan the environment they are in to determine the context of the situation, resulting in translated dialogue that reflects feelings such as delight, anger, sorrow and pleasure.

The ministry aims to use the system to support companies' moves to expand overseas, as well as businesses catering to foreign tourists visiting Japan.

The ministry expects the system to be used for teleconferencing between Japan and other countries and for translating online videos.

The system will be developed by the National Institute of Information and Communications Technology (NICT), which is under the ministry's jurisdiction, and made available for companies and individuals.

An existing AI-based automatic translation system recognises speech and converts it into text before translating it.

The translated text is then converted back into speech.

It is difficult for the current system to accurately recognise and translate every single word that is spoken, meaning a certain level of mistranslation is unavoidable.
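The three-stage flow of the existing system (speech to text, text to translated text, translated text back to speech) can be sketched roughly as follows. This is only an illustration under stated assumptions: the function names and the toy stand-ins for each stage are hypothetical and are not taken from the NICT system.

```python
from typing import Callable


def translate_speech(
    audio: bytes,
    recognise: Callable[[bytes], str],
    translate: Callable[[str], str],
    synthesise: Callable[[str], bytes],
) -> bytes:
    """Chain the three stages described in the article."""
    source_text = recognise(audio)        # speech -> text
    target_text = translate(source_text)  # text -> translated text
    return synthesise(target_text)        # translated text -> speech


# Toy stand-ins (assumptions): real stages would be trained models.
def toy_recognise(audio: bytes) -> str:
    return audio.decode("utf-8")


def toy_translate(text: str) -> str:
    return {"konnichiwa": "hello"}.get(text, text)


def toy_synthesise(text: str) -> bytes:
    return text.encode("utf-8")


result = translate_speech(b"konnichiwa", toy_recognise, toy_translate, toy_synthesise)
```

Because each stage consumes the output of the one before it, a word misheard in the first stage is carried through to the final speech, which is one way to picture why some mistranslation is hard to avoid.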

The envisaged AI technology will be capable of scanning environments to collect more information, such as facial expressions, the date and time, and the place where a conversation is being held, making it possible to generate more accurate translations.

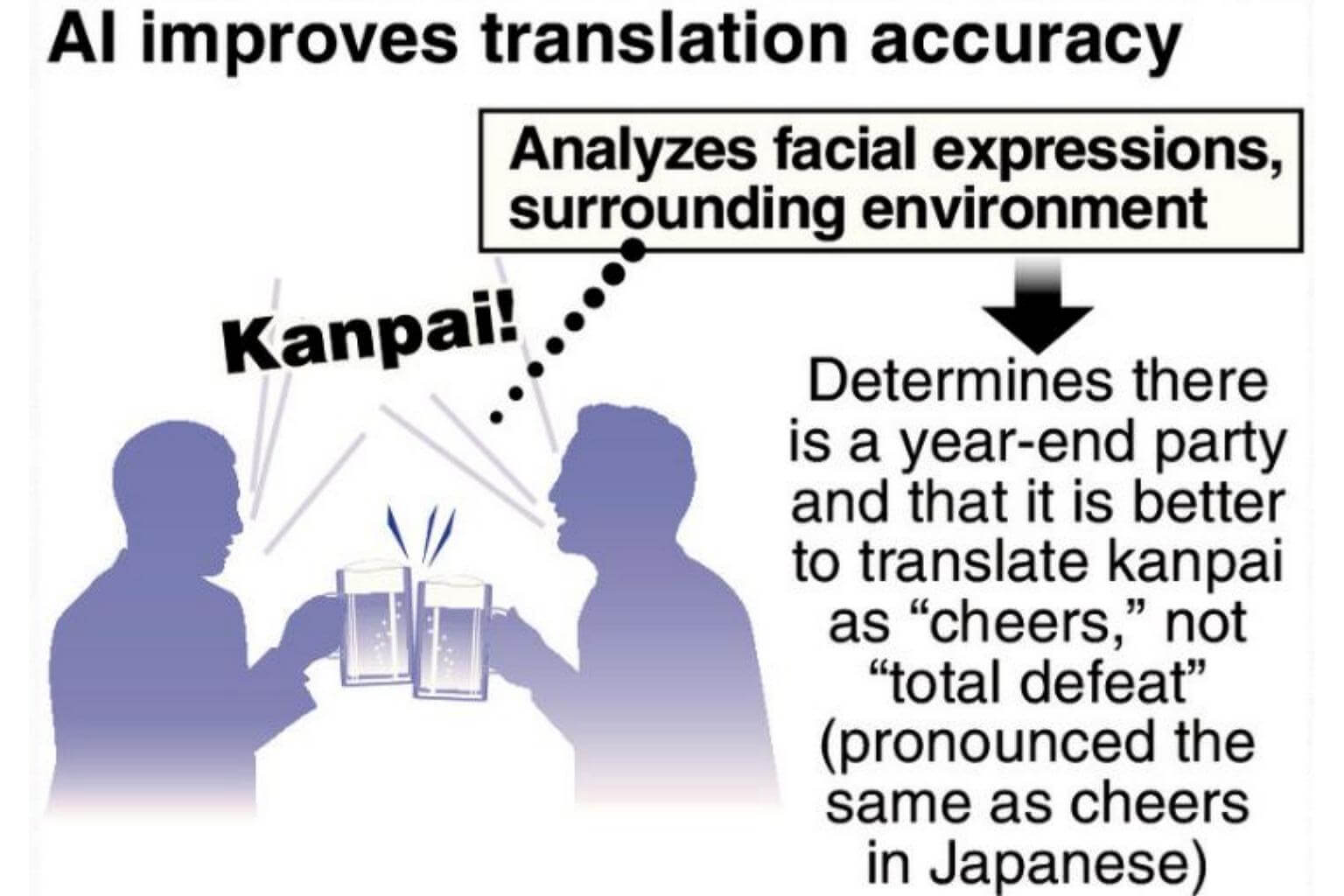

For example, "kanpai" is the pronunciation of several Japanese words with different meanings, and so can be translated in different ways.

However, if "kanpai" were said at a year-end party, for example, the new system's AI would analyse the environment and recognise that it means "cheers".
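The idea of using the setting to choose between same-sounding words can be pictured with a minimal sketch. Everything here is an assumption made for illustration: the setting labels, the lookup table and the "complete defeat" sense (one common homophone of "kanpai") are not drawn from the ministry's plan, and a real system would presumably infer the setting with a trained model rather than a fixed table.

```python
from dataclasses import dataclass


@dataclass
class Context:
    setting: str  # hypothetical label, e.g. "year_end_party" or "sports_match"


# Hypothetical table: the same pronunciation mapped to a sense per setting.
SENSES = {
    "kanpai": {
        "year_end_party": "cheers",
        "sports_match": "complete defeat",
    }
}

# Hypothetical fallback used when the setting is not recognised.
DEFAULT_SENSE = {"kanpai": "cheers"}


def translate_with_context(word: str, context: Context) -> str:
    """Pick a translation for `word` based on the detected setting."""
    senses = SENSES.get(word)
    if senses is None:
        return word  # unknown word: leave untranslated in this sketch
    return senses.get(context.setting, DEFAULT_SENSE[word])
```

For instance, `translate_with_context("kanpai", Context("year_end_party"))` returns `"cheers"` in this toy table.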

If there are words the AI cannot catch, it will guess by reading the speaker's lips.

The NICT will also develop technology to select more appropriate words for translation by judging facial expressions.

For instance, "shizuka ni" in Japanese will be translated according to the speaker's feelings: as "please be quiet" if said with a gentle expression, or as "shut up" if said angrily.
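Choosing wording from a detected expression could look something like the sketch below. It assumes, purely for illustration, that an upstream step has already labelled the speaker's expression as "gentle" or "angry"; the labels, the table and the fallback rule are hypothetical and do not describe the NICT design.

```python
# Hypothetical table: one phrase, several renderings keyed by expression label.
RENDERINGS = {
    "shizuka ni": {
        "gentle": "please be quiet",
        "angry": "shut up",
    }
}


def translate_with_expression(phrase: str, expression: str) -> str:
    """Select a rendering of `phrase` that matches the speaker's expression."""
    options = RENDERINGS.get(phrase)
    if options is None:
        return phrase  # phrase not in the table: leave it as-is in this sketch
    # Assumption: fall back to the gentler wording when the expression is unclear.
    return options.get(expression, options["gentle"])
```

Falling back to the gentler wording is a design choice of this sketch: when the expression cannot be read, the milder translation is less likely to cause offence.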

IT giant Google substantially improved the accuracy of its automatic translation services in 2016 by introducing the latest AI technology. The phenomenon was dubbed the "Google shock".

The NICT has developed its own automatic translation system and taken the lead in technology for translating Japanese by utilising its abundant translation data.

By improving translation accuracy with the new AI technology, the ministry is aiming to help develop automatic translation-related products and services that can be used at home and abroad.