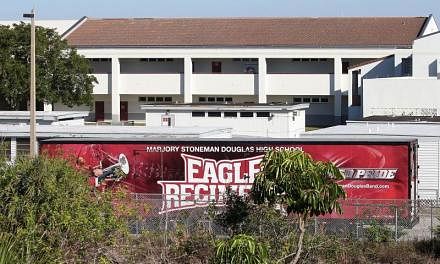

SAN FRANCISCO (NYTIMES) - One week after the school shooting in Parkland, Florida, Facebook and YouTube vowed to crack down on the trolls.

Thousands of posts and videos had popped up on the sites, falsely claiming that survivors of the shooting were paid actors or part of various conspiracy theories. Facebook called the posts "abhorrent." YouTube, which is owned by Google, said it needed to do better. Both promised to remove the content.

The companies have since aggressively pulled down many posts and videos and reduced the visibility of others. Yet on Friday (Feb 23), spot searches of the sites revealed that the noxious content was far from eradicated.

On Facebook and Instagram, which is owned by Facebook, searches for the hashtag #crisisactor, which accused the Parkland survivors of being actors, turned up hundreds of posts perpetuating the falsehood (though some also criticised the conspiracy theory). Many of the posts had been tweaked ever so slightly - for example, videos had been renamed #propaganda rather than #hoax - to evade automated detection. And on YouTube, while many of the conspiracy videos claiming that the students were actors had been taken down, other videos that claimed the shooting had been a hoax remained rife.

Facebook faced renewed criticism Friday after it was revealed that the company showcased a virtual reality shooting game at the Conservative Political Action Conference this week. Facebook said it was removing the game from its demonstration of its new virtual reality products.

The resilience of misinformation, despite efforts by the tech behemoths to eliminate it, has become a real-time case study of how the companies are constantly a step behind in stamping out the content. At every turn, trolls, conspiracy theorists and others have proved to be more adept at taking advantage of exactly what the sites were created to do - encourage people to post almost anything they want - than the companies are at catching them.

"They're not able to police their platforms when the type of content that they're promising to prohibit changes on a too-frequent basis," Jonathon Morgan, founder of New Knowledge, a company that tracks disinformation online, said of Facebook and YouTube.

The difficulty of dealing with inappropriate online content stands out with the Parkland shooting because the tech companies have effectively committed to removing any accusations that the Parkland survivors were actors, a step they did not take after other recent mass shootings, such as October's massacre in Las Vegas. In the past, the companies typically addressed specific types of content only when it was illegal - posts from terrorist organisations, for example - Morgan said.

Facebook and YouTube's promises follow a stream of criticism in recent months over how their sites can be gamed to spread Russian propaganda, among other abuses. The companies have said they are betting big on artificial intelligence systems to help identify and take down inappropriate content, though that technology is still being developed.

The companies have in the meantime hired or said they plan to hire more people to comb through what is posted to their sites. Facebook said it was hiring 1,000 new moderators to review content and was making changes to what type of news publishers would be favoured on the social network. YouTube has said it plans to have 10,000 moderators by year's end and that it is altering its search algorithms to return more videos from reliable news sources.

Mary deBree, head of content policy at Facebook, said the company had not been perfect at staving off certain content and most likely would not be in the future.

"False information is like any other challenge where humans are involved: It evolves, much like a rumour or urban legend would. It also masks itself as legitimate speech," she said. "Our job is to do better at keeping this bad content off Facebook without undermining the reason people come here - to see things happening in the world around them and have a conversation about them."

A YouTube spokesman said in a statement that the site updated its harassment policy last year "to include hoax videos that target the victims of these tragedies. Any video flagged to us that violates this policy is reviewed and then removed."

For many people, getting around Facebook and YouTube's hunt to remove noxious content is straightforward. The sites have automated detection systems that often search for specific terms or images that have previously been deemed unacceptable. So to evade those systems, people sometimes can alter images or switch to different terminology.

Sam Woolley, an internet researcher at the nonprofit Institute for the Future, said far-right groups had started using internet brands to describe minorities - like "Skype" to indicate Jewish people - to trick software and human reviewers.

Those who post conspiracy theories also tend to quickly repost or engage with similar posts from other accounts, creating a sort of viral effect that can cause the sites' algorithms to promote the content as a trending topic or a recommended video, said David Carroll, a professor at the New School who studies tech platforms.

That duplication and repackaging of misinformation "make the game of snuffing it out whack-a-mole to the extreme," he said.

That game played out across the web in the past few days, after a video suggesting that one of the most vocal Parkland survivors, David Hogg, was an actor became the No. 1 trending video on YouTube. After a public outcry, YouTube removed the video and said it would take down other "crisis actor" videos because they violated its ban on bullying. YouTube has since scrubbed its site of many such videos.

Yet some of the videos remained, possibly because they used slightly different terminology. One clip that had drawn more than 77,000 views by Friday described the shooting survivors as "disaster performers" instead of "crisis actors."