SINGAPORE - Social media firms will be held more accountable to users here as Singapore looks to roll out a slew of online safety measures, including content filters and user reporting tools, to minimise local access to harmful material.

These measures - proposed under the Code of Practice for Online Safety and the Content Code for Social Media Services - received public support during a month-long consultation conducted by the Ministry of Communications and Information (MCI).

The codes are expected to be rolled out as early as 2023 after they are debated in Parliament.

Over 600 members of the public - including parents, youth, academics, community and industry groups - participated in the consultation, which ended in August.

"Overall, respondents generally supported the proposals," said Minister for Communications and Information Josephine Teo in a Facebook post on Thursday.

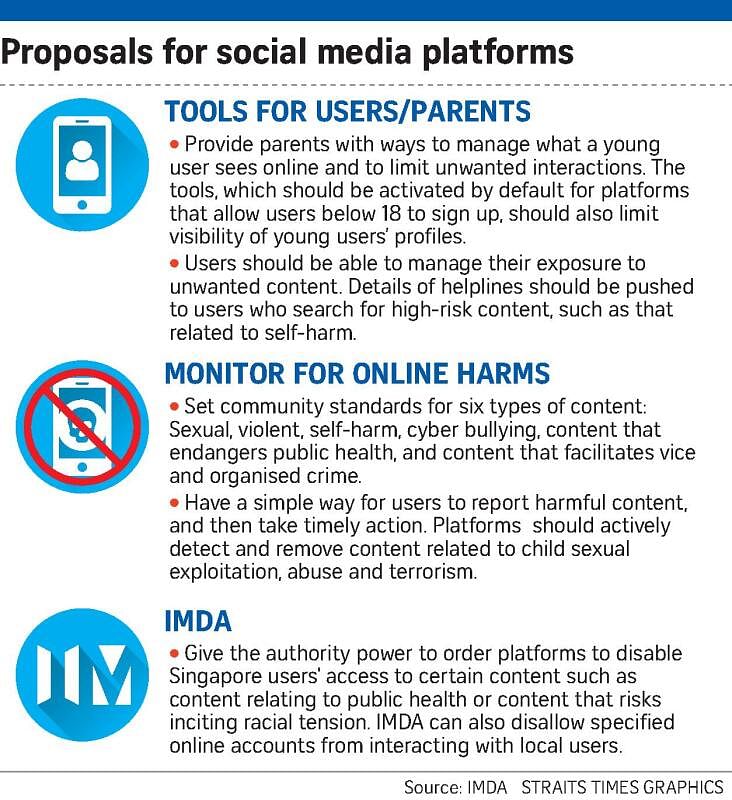

In a summary note issued on Thursday, the MCI said the public generally agreed that social media platforms, which include Facebook, Instagram and TikTok, will need to implement safety standards for six types of content: sexual content, violent content, self-harm content, cyber-bullying content, content that endangers public health and content that facilitates vice and organised crime.

Assuring industry groups that Singapore's approach will be outcome-based, MCI said: "Designated social media services will be given some flexibility to develop and implement the most appropriate solutions to tackle harmful online content on their services, taking into account their unique operating models."

In general, the platforms will be expected to moderate users' exposure or disable access to these types of content when users report them.

Platforms will be required to proactively detect and remove child sexual exploitation and abuse material, as well as terrorism content. They will also be required to push helpline numbers and counselling services to users who search for high-risk content, including content related to self-harm and suicide.

How social media platforms handle user reports emerged as a point of contention during the public consultation.

Some respondents said that objectionable content reported by them was not removed. Others wanted such platforms to be more accountable, requesting that users be updated on the decision made and be allowed to appeal against dismissed reports.

Responding, MCI said that it will require the platforms to have accessible, effective and easy-to-use user reporting mechanisms. As MCI had proposed, platforms must take appropriate action "in a timely and diligent manner". The ministry did not specify a takedown timeframe.

Platforms will also be required to produce and publish annual accountability reports on the effectiveness of their safety measures. These reports will include metrics on how prevalent harmful content is on their platforms, the user reports they received and acted on, and the processes they use to address harmful content.

Tools that allow parents and guardians to limit who can connect with their children on social media, and filters that limit what is viewed online, will also have to be activated by default for local users below the age of 18. The aim is to minimise children's exposure to inappropriate content and unwanted interactions like online stalking and harassment.

Some members of the public suggested that social media platforms verify users' age and implement mandatory safety tutorials to better protect children. To this, MCI said: "We will continue to work with the industry to study the feasibility of these suggestions as we apply an outcome-based approach to improving the safety of young users on these services."

The new codes will fall under the Broadcasting Act, which now also contains the Internet Code of Practice. Penalties for flouting the rules have not been spelt out.

The Infocomm Media Development Authority will be granted powers to direct any social media service to block objectionable accounts or disable access to egregious content for users in Singapore.

During the consultation, industry groups asked for flexibility on the timelines for social media firms to remove the content. To this, MCI said: "The timeline requirements for social media services to comply with the directions will take into account the need to mitigate users' exposure to the spread of egregious content circulating on the services."

Thanking the public for their feedback, Mrs Teo said: "Many also highlighted that regulations need to be complemented by public and user education to guide users in dealing with harmful online content, and engaging with others online in a safe and respectful manner."