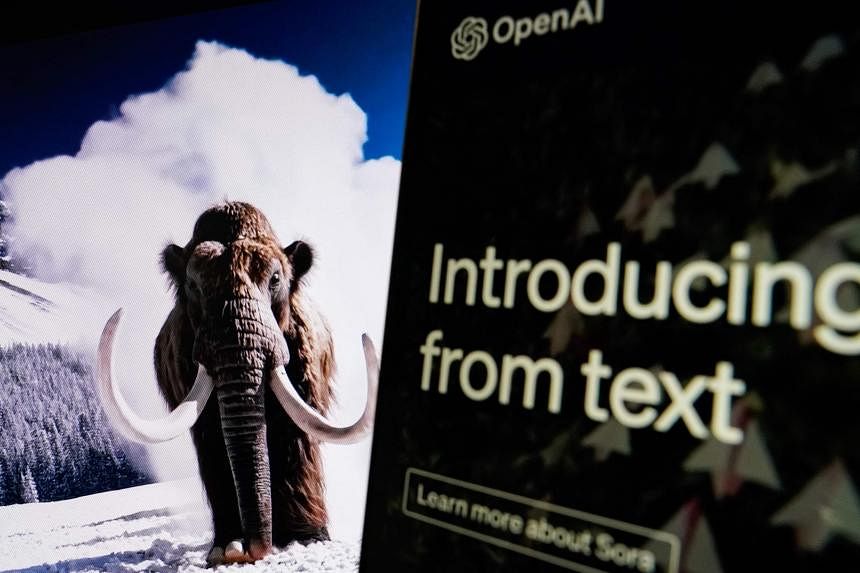

SINGAPORE – The imminent launch of Sora, a new video creation tool from ChatGPT maker OpenAI that can generate realistic scenes from simple text prompts, has set a new standard for AI-generated videos.

It is also likely to make AI-generated content, and with it deepfakes, tougher to spot.

OpenAI on Feb 15 revealed a series of clips made by Sora, an artificial intelligence model trained to generate videos based on short sentences.

Its developers are holding off on a public launch for now to collect feedback from the public and develop safety measures to prevent the abuse of Sora. But experts expect it to become tougher for the untrained eye to spot AI-generated content, and have expressed concerns over a lack of technology that can proactively scan for deepfakes.

The Straits Times worked with AI experts to analyse OpenAI’s showcase videos and spot subtle clues that they are fake.

Unnatural lighting

Look out for inconsistencies in a scene’s lighting, something text-to-video AI models appear to struggle with, judging by Sora’s preview videos, said National University of Singapore (NUS) School of Computing Associate Professor Terence Sim.

In a scene of Tokyo created by Sora, the sky is overcast, yet the water’s surface reflects a sunset, said Prof Sim, who is a co-primary investigator at NUS’ Centre for Trusted Internet and Community.

Scenes created by AI typically do not show as much variation in lighting conditions – or dynamic range – as real footage, he noted. “Bright and dark regions in the image do not vary by much, resulting in a ‘flat’ illumination,” said Prof Sim.

Hands

As with AI-generated images, Sora seems to struggle with animating hands, which are oddly rendered in a scene depicting characters clapping during a birthday celebration.

AI models tend to generate too many or too few fingers, and the position of the hands relative to the body looks wrong, said Prof Sim.

Awkward movement

One of Sora’s headlining videos, of a stylish-looking woman walking through a neon-lit Tokyo street, demonstrates just how tricky it is to catch AI-made videos, said Prof Sim.

“The model’s skin is very realistic, there are imperfections and blemishes, when previously, AI skin was too smooth and looked artificial,” he said. “With Sora, the realism has gone up, and it is getting harder to spot (AI-made videos).”

But there are still subtle signs that the video is fake, such as the unnatural movement of people in the background, who appear to be gliding across the street.

Hair, too, provides clues, as the strands do not sway naturally when the woman turns her head, said Prof Sim.

In a separate video of five wolf pups chasing one another, the animated animals appear to blend into one another as the AI seems unable to distinguish one figure from another. A similar issue is seen in a video of a basketball, which appears to pass through the netting of a hoop.

Pay attention to strange flickering and body movements, said Mr Lee Joon Sern, senior director of machine learning and cloud research at Ensign InfoSecurity’s Ensign Labs.

Such inconsistencies were clear giveaways in the deepfake videos of Singapore political figures that emerged in a series of incidents in late 2023.

Playing catch-up

Researchers globally are working on tools to counter the risks of AI-made content, including software that analyses videos for signs of AI, such as by examining patterns in a video’s audio track and cross-referencing it against other sources for discrepancies, Mr Lee said.

Others are looking into metadata analysis, unpacking a digital file’s data to trace its origin and authenticity, he added.

Regulation and penalties for using AI for fraud are key steps to rein in the technology, said Mr Chris Boyd, staff research engineer at cyber-security firm Tenable.

The European Union has passed the AI Act, which will require creators to label AI-generated content with digital watermarks.

Singapore has set aside $20 million for a research drive to counter deepfakes, including the use of watermarking technology.

As with metadata analysis, it remains to be seen how these measures will be applied in practice to regulate AI.

Watermarking must first be available on the platform used to create synthetic content, and the onus falls on creators to declare that their content is AI-generated, said Mr Boyd.

There is currently a lack of technology that proactively flags AI-generated content, he added. Platforms will also need in-built software to read watermarks and signal them to audiences if the labels are embedded in an image’s pixels.

Countering deepfakes is not entirely a technology problem, as viewers often have to consider their own biases when faced with deepfakes – especially in a year when much of the world is heading to the polls, said Mr Boyd.

“Many of the older deepfake videos we have seen were terribly made, but people still fell for them, even when they were told that they were fake,” he said. “It’s not all about technical solutions. We are often reliant on our own biases in determining fake from real.”